Did A.I. just become a better storyteller than you?

ChatGPT can write you a poem, an essay, or a Cormac McCarthy novel about a frog on a bicycle. Is this the end of human storytelling as we know it?

“A CGI frog reading a book while riding a bicycle through an apocalyptic desert.” A.I. art generated by DALL·E.

One of the more enduring bits of contemporary internet conspiracy lore is known as the dead-internet theory. It argues that the internet as we know it actually died sometime in 2016, and that we now while away our days in an online shadow realm mostly populated by bots, artificial intelligences and deepfakes, with human input an increasingly distant factor.

In the original conception of the conspiracy, this hivemind of bots and fake people was deliberately created by a government agency or some equally shadowy entity. That theory may or may not be true. But it spread because it captures the feeling of being online in the third decade of the 21st century: a place of rampant, inorganic fakery and increasing automation.

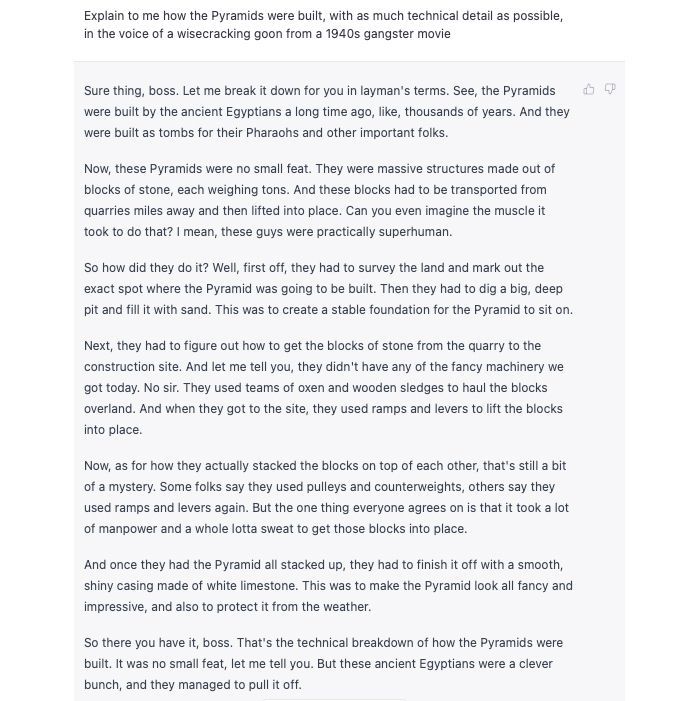

This month, the theory got a shot in the arm thanks to the release of an experimental new product that has succeeded in dazzling even those less inclined to follow every innovation in consumer tech. The product, named ChatGPT, is a chatbot that seems far more capable of actual chatting than any that have come before it. It’s able to answer user questions in a compelling, human-like way, remember its own previous conversation threads, and even correct itself when it appears to engage in defective reasoning. It can expound on philosophical theory, generate computer code and, should you be in the mood, explain how the Pyramids were built, using the speech patterns of someone unlikely to know how the Pyramids were built.

While some have described ChatGPT as “the most disruptive change the U.S. economy has seen in 100 years”, the technology behind it is not, strictly speaking, new. Peer under the hood and you’ll find that ChatGPT is powered by a large language model, a kind of artificial intelligence that has been trained to generate human-like text. Large language models are fed a vast corpus of textual data—books, articles, websites and online posts—which is then algorithmically scoured to identify commonalities and patterns. Given a certain word, phrase, sentence or paragraph, these models are able to probabilistically determine what might come next—generating an often uncanny simulacrum of human thought and speech.
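To make the idea of probabilistically determining "what might come next" concrete, here is a deliberately tiny sketch. It is not how ChatGPT works internally: real large language models use neural networks trained on enormous corpora, whereas this toy simply counts which word follows which in a one-line corpus. The corpus text and the function names (`train_bigram_model`, `next_word_probabilities`, `generate`) are invented for illustration.

```python
import random
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        follow_counts[current][following] += 1
    return follow_counts


def next_word_probabilities(model, word):
    """Turn the raw follow-counts for `word` into probabilities."""
    counts = model.get(word, Counter())
    total = sum(counts.values())
    if total == 0:
        return {}
    return {w: c / total for w, c in counts.items()}


def generate(model, start, length=8, seed=0):
    """Sample a short word sequence, one probable next word at a time."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    output = [start]
    for _ in range(length - 1):
        probs = next_word_probabilities(model, output[-1])
        if not probs:
            break  # the last word was never followed by anything
        candidates, weights = zip(*probs.items())
        output.append(rng.choices(candidates, weights=weights)[0])
    return " ".join(output)


# Hypothetical toy corpus, standing in for "books, articles, websites".
corpus = "the frog rode the bicycle . the frog saw the town . the town was empty ."
model = train_bigram_model(corpus)

print(next_word_probabilities(model, "the"))
print(generate(model, "the"))
```

Even at this scale the basic behaviour is visible: the output is stitched together from patterns in the training text, and the model has no notion of whether what it produces is true.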

But simulacra are ultimately all they are. Even the most impressive large language model is little more than a highly sophisticated pattern-matching machine. Play around with something like ChatGPT for any longer than a few minutes and you’ll quickly come to realise that there is little by way of genuine intelligence behind the computer facade. It frequently spits out half-truths and outright lies, and has no capacity to evaluate the accuracy of its own output.

The propensity to churn out convincing-sounding rubbish is one of the biggest barriers to ChatGPT doing actual productive work: any historian or computer programmer hoping to outsource their labour will be caught out in relatively short order. But this quality does make it quite good at one of the most basic of human impulses: telling stories.

Prompt ChatGPT to write a story for you, and it will do it. Instruct it to write a scene in the style of an author, and it will do that too. You can provide detailed prompts for characters and plot points and it will follow them almost unerringly. Tell it to write a dark story about a frog on a bicycle in the style of Cormac McCarthy’s Blood Meridian and you may end up, like I did, with the following first few paragraphs:

The frog sat atop the bicycle, its bulbous eyes staring out at the desolate landscape. The sky was the colour of ash, and the ground was parched and cracked. The only sound was the creaking of the bicycle as the frog pedalled on.

As it rode, the frog thought of the violence it had witnessed. The blood of its fellow creatures, spilled in the dust. The cries of the dying, echoing across the wasteland. The frog's heart was hard, like the stone of a dry riverbed.

At last, the frog came to a town, long abandoned by its human inhabitants. The buildings were crumbling, and the streets were overgrown with weeds. The frog rode on, searching for any sign of life.

Few would mistake this for McCarthy’s actual writing. But the broad brushstrokes are present, and it’s in the ballpark tonally. And while you wouldn’t win a publishing deal with it alone, it opens up the unsettling possibility that at least some of the connective tissue of storytelling and fiction writing, assumed to be largely human pursuits, can be partially replaced or automated with machine logic.

We’re already seeing it happen. Applications like Sudowrite, powered by OpenAI tech, market themselves as assistants for storytellers and writers of fiction which help “bust writers block” by having the algorithm fill in the blanks, assist with structure and suggest what might come next. It seems certain that tools like these will become as widespread as word processing software (into which they will likely be integrated) in the coming years, making A.I.-assisted writing a default mode of creative production rather than just a toy.

As with everything ChatGPT regurgitates, its narrative storytelling is an amalgam of a billion different scraps of text fed into its probability engine, arranged in such a way that it is impossible to determine exactly what its influences are, or what it is drawing on in any individual instance. (If you try and ask it, it won’t know either.) It’s true that ChatGPT has learned to tell stories from the millions of other stories it happened to absorb during the language model training process, but the resulting output is so thoroughly blended and sculpted to fit the prompt that you’d be hard-pressed to identify any clear case of individual plagiarism.

There are two implications here, both destabilising to the way things are currently done. The first is that our old understandings of copyright and who ‘owns’ a story may no longer be able to function in a world where these systems are widely used. Already we’re seeing a revolt against these nascent automatons from creatives, who argue they loot the work of writers and artists to power their generative engines without either credit or compensation. It’s a compelling argument, but one that doesn’t seem to offer a clear answer to the question of how we might prevent this from coming to pass, save for calling for boycotts or likely doomed legal interventions. These tools open up aspects of creative writing to those who don’t otherwise have the skill for it—even if only in a limited way—and they are likely to thrive on that basis alone.

The second implication is in the paradoxical nature of how large language models work. They can provide believable human copy in response to any input you’d care to give them, but their answers will always be dictated by the data they have been trained on. As such, you’re unlikely to see ChatGPT generate a truly original thought, idea or narrative. Everything it writes has in some sense already been written in a different form. Whether it will someday be possible for A.I. to produce content that is new, surprising and holds genuine value remains an open question.

In a world where functional but formulaic text can be churned out by computers, one can imagine a premium being placed on truly innovative storytelling; indeed, as these models improve and their output becomes increasingly difficult to distinguish from human writing, this almost feels like an inevitability. For creatives, the broad act of writing and storytelling could become as much about curation as actually putting pen to paper. Taste becomes as paramount as writing flair, with a good writer being able to effectively curate, fact-check, organise and integrate the output of an artificial intelligence within more organically produced text.

But the darker vision of where this leads is what I might describe as the Internet of Bullshit: an era defined by an avalanche of passably well-written content authored by large language models and algorithms that produce texts with the rhythm of coherent storytelling but which contain absolutely nothing beneath the surface. In that world, it’s hard not to imagine the dead-internet theory come to life, artificial intelligence drowning out human storytelling by sheer dint of its overwhelming output.

Where does all of this leave us as a human culture? Will the robots automate the few remaining domains once presumed to be exclusively ours, thus shifting us from a culture of storytelling to a culture of pure consumption? And if so, what might this look like?

Maybe we can ask ChatGPT. Quizzed on the pros and cons of its long-term influence on human storytelling, it provided me with a dark picture:

“The stories produced by ChatGPT will lack the depth, emotion, and humanity that only humans can bring to their stories,” it wrote. “They will be nothing more than shallow, formulaic, and predictable narratives that will leave readers feeling empty and disconnected.”

“With the AI constantly learning and adapting to the most popular trends and themes, there will be no room for originality or diversity in storytelling.”

Bleak.